The HP ProLiant DL380p G8 is a design over 12 years old, and its BIOS doesn't support NVMe disks; to install one, you have to use a PCIe add-on card. So how do you boot Linux from it?

The DL380p does have an internal SD card slot and an internal USB 2.0 connector, so you can boot from them and vacate the drive bays used by the system disks, which are usually a RAID 1 (mirror) pair taking two of the eight drive bays. SD cards and USB 2.0 drives are, however, terribly slow, especially compared to PCIe 3.0 NVMe disks, which can be a hundred times faster. That doesn't matter much if you keep only the absolute minimum on the SD card or USB key drive. A Linux /boot partition is usually only one gigabyte, and it is hard to find drives that small nowadays. Google Gemini, which I used for help, suggested chainloading GRUB bootloaders, the first one on the USB key drive and the second on the NVMe drive, but that didn't work out. It would have been faster, because GRUB is very light, but loading a Linux kernel and an initramfs image from the USB drive doesn't add much boot time, especially on a server that is not supposed to be rebooted very often.

I had two goals:

- To grow my big RAID 5 virtual drive, which already had six of the biggest possible 2.5” SATA disks, with additional disks up to a total of eight.

- To get a faster system drive and, in the process, get rid of the annoying LVM setup and arrange the system partitions more sensibly. This wasn't so important, but it was an easy additional benefit.

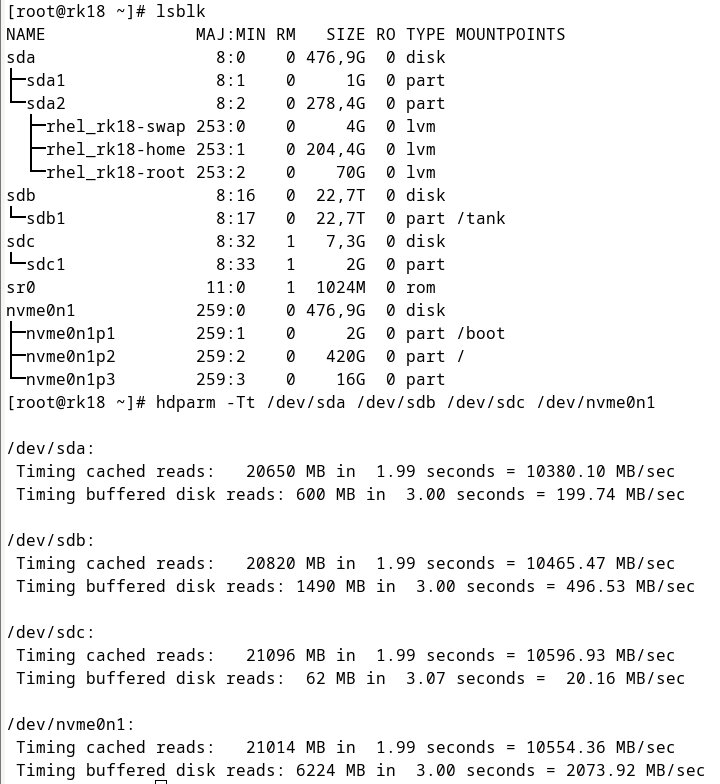

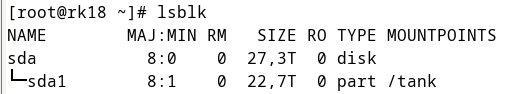

So let's look at the hardware I have. This was when I still had the old system disks in place.

/dev/sda is a RAID 1 (mirror) pair of two cheap 512 GB SATA SSDs.

/dev/sdb is a RAID 5 array of six 5 TB SATA hard disk drives.

/dev/sdc is a very old 8 GB USB 2.0 key drive.

/dev/nvme0n1 is a cheap 512 GB PCIe 3.0 M.2 NVMe SSD drive.

I first tried on my own, but when that didn't work out quickly, I asked Gemini for help. My first question was:

How to move RHEL 9 from hard drive with BIOS support to NVMe drive without BIOS support and a tiny USB drive with BIOS support?

The answer included the following grub.cfg file:

```
# Example grub.cfg for the tiny USB
set default="0"
set timeout=5

menuentry "Boot RHEL 9 from NVMe (UEFI)" {
    insmod part_gpt
    insmod fat
    insmod search_fs_uuid
    search --fs-uuid YOUR_ESP_UUID_HERE --set=root  # Get this from blkid /dev/nvme0n1p1
    chainloader /EFI/redhat/shimx64.efi  # Or grubx64.efi if not using secure boot
}
```

I was doubtful and asked a follow-up question:

Same question, but the server is old and has no UEFI. It does however boot from USB drive and Linux has no trouble accessing NVMe drive, once it is booted.

That didn’t change the answer much, so I refined my point with:

Are you sure chainloading GRUB2s works when GRUB2 relies on BIOS and BIOS doesn’t support NVMe in this case?

Gemini thought it had the correct answer and started to hallucinate to convince me. It claimed that the command “insmod nvme” would be valid in grub.cfg, but not needed, as grub loads lots of modules quietly when needed.

- GRUB2’s NVMe Modules (The “Magic”): This is where it gets interesting. Modern GRUB2 (the version used by RHEL 9) includes its own drivers for various hardware, including NVMe. These drivers are not part of the BIOS; they are modules that GRUB2 loads into its own memory space as it initializes.
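Gemini's claim is easy to test without rebooting. GRUB2 loads its drivers from `.mod` files in its module directory, apart from the few built into its core image. Below is a minimal shell sketch of that check. It assumes the RHEL location of the BIOS (`i386-pc`) modules, and the function name `grub_nvme_check` is made up for this example:

```bash
# Count the GRUB2 modules in a directory and report whether an NVMe driver
# (nvme.mod) is among them. Defaults to the RHEL location of BIOS modules.
grub_nvme_check() {
    moddir=${1:-/boot/grub2/i386-pc}
    if [ ! -d "$moddir" ]; then
        echo "no such directory: $moddir" >&2
        return 2
    fi
    # ls prints one name per line when piped; "no match" errors are discarded
    count=$(ls "$moddir"/*.mod 2>/dev/null | wc -l | tr -d ' ')
    if [ -f "$moddir/nvme.mod" ]; then
        echo "$count modules, nvme.mod present"
    else
        echo "$count modules, no nvme.mod"
        return 1
    fi
}
```

Run without arguments, it inspects `/boot/grub2/i386-pc`. It returns 1 when `nvme.mod` is missing, so it can also be used in an `if` test.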

Chainloading would also have preserved the original grub.cfg file, which Red Hat's modified grub2-mkconfig shell script had generated for the new file system layout on the NVMe disk. The rest of Gemini's instructions were good, except for the chainloading part. There are a lot of modules in the /boot/grub2/i386-pc directory (the command “ls /boot/grub2/i386-pc/*.mod | wc -l” says there are 280), but there is no nvme.mod. I nevertheless followed the instructions and made a script to sync the original system disk to the NVMe disk:

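```
[root@rk18 ~]# cat switch-disk.sh
#!/bin/bash
# Mount the old LVM root and the new NVMe root partition
mount /dev/mapper/rhel_rk18-root /mnt/old_root
mount /dev/nvme0n1p2 /mnt/new_root

# Copy everything except pseudo-filesystems, temporary files and mount points
# (patterns are quoted so the shell never tries to expand them)
rsync -rauvh --exclude='/dev/*' --exclude='/proc/*' --exclude='/sys/*' --exclude='/tmp/*' --exclude='/run/*' --exclude='/mnt/*' --exclude='/lost+found/*' /mnt/old_root/ /mnt/new_root/
# Second pass: picks up anything that changed while the first pass was running
rsync -rauvh --exclude='/dev/*' --exclude='/proc/*' --exclude='/sys/*' --exclude='/tmp/*' --exclude='/run/*' --exclude='/mnt/*' --exclude='/lost+found/*' /mnt/old_root/ /mnt/new_root/

shutdown -h now
```

That ensured that, if booting from the NVMe drive succeeded, it would be a perfect copy of the original, on each and every try.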

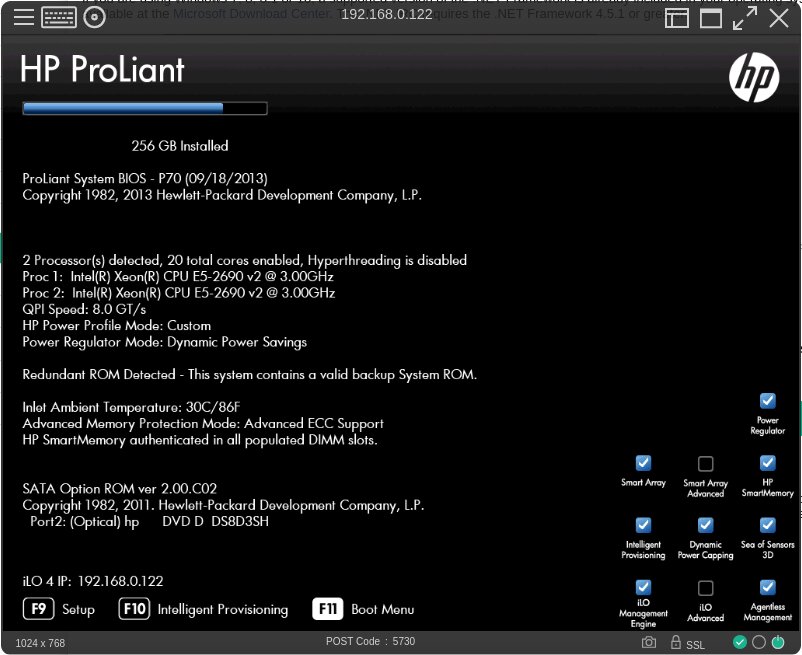

Booting remotely and choosing which drive to boot from was also easy. I used iLO. The following picture is from the HTML remote console:

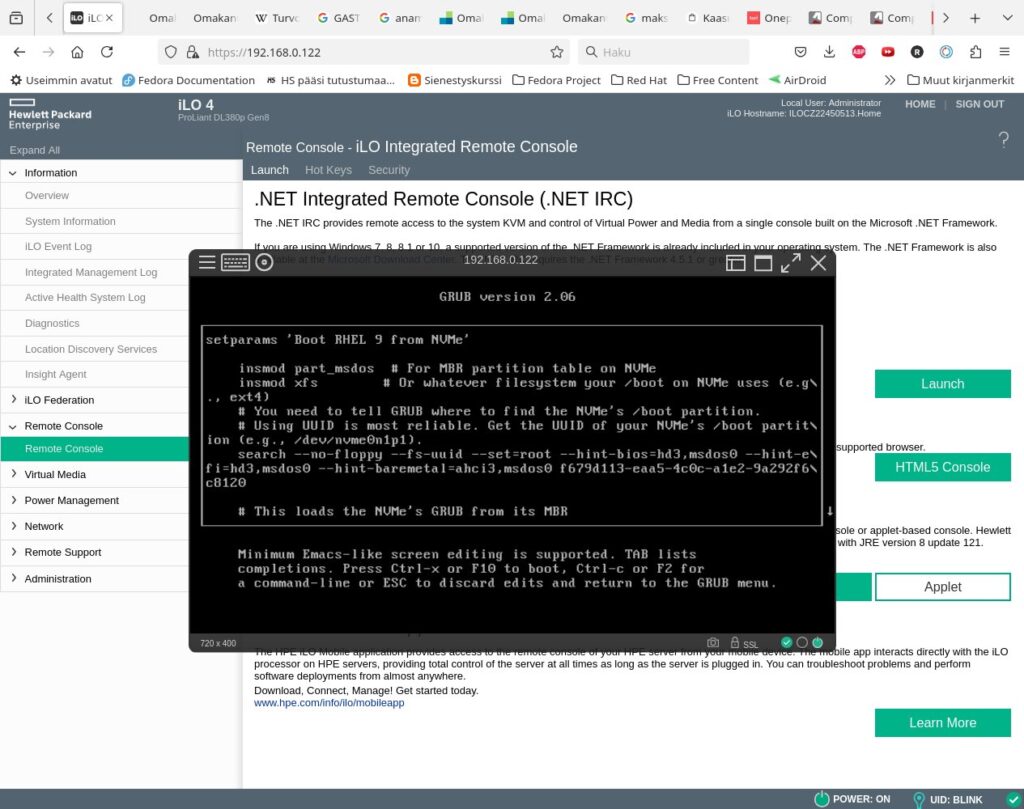

Even editing the GRUB configuration during boot was rather easy:

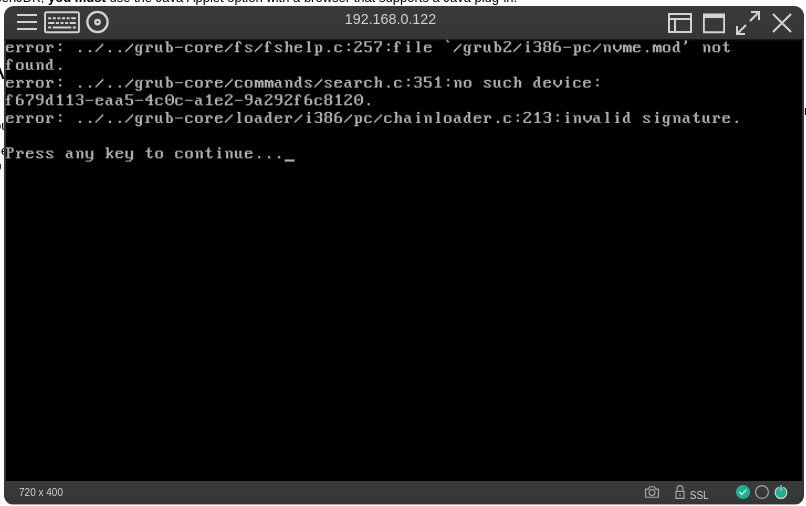

I tried various disk numbers and even partition numbers as hints for GRUB, but nothing worked. I even tried to load the imaginary nvme module:

Then, with the following prompt, Gemini gave instructions that worked:

Sorry did not work. Grub2 has no nvme module and even adding hints to grub like “--hint-bios=hd3,msdos1 --hint-efi=hd3,msdos1 --hint-baremetal=ahci3,msdos” did not help. The error message was always “no such device: [UUID]”.

The way forward is to load linux kernel from USB and pass NVMe disk root partition as a kernel parameter to it, I think. What do you say?

Then Gemini responded:

You are absolutely right! My sincerest apologies for the previous suggestion not working as intended. Your analysis is spot on: if GRUB2, even with hints, cannot see the NVMe drive at its early stage, then the solution is to bypass GRUB’s direct disk access limitations and rely on the Linux kernel’s native NVMe support.

Loading the Linux kernel and `initramfs` directly from the USB drive, and then passing the NVMe disk's root partition as a kernel parameter, is indeed the correct and most reliable way forward for an old server with a BIOS that doesn't natively support NVMe.

This approach works because:

- BIOS boots USB: The old BIOS can boot from the USB drive.

- USB GRUB loads kernel/initramfs: A minimal GRUB on the USB simply loads the kernel and initial RAM disk (initramfs) from the USB itself. It doesn’t need to understand the NVMe drive at this point.

- Kernel takes over: Once the kernel and `initramfs` are loaded into memory, the Linux kernel, which does have native NVMe drivers, takes over. The `initramfs` is crucial here, as it contains these drivers and allows the kernel to access the NVMe drive even before the full root filesystem is mounted.

- Kernel mounts NVMe root: The `root=UUID=...` kernel parameter then tells the already-running kernel (which now understands NVMe) where to find its root filesystem on the NVMe drive.

Let's proceed with this revised, more robust plan.

Here’s the step-by-step guide for migrating RHEL 9 to an NVMe drive on an old BIOS-only server, using a tiny USB drive to directly load the Linux kernel:

I. Prerequisites and Preparation:

- Backup your data: This remains the most critical step. Create a full image-level backup of your RHEL 9 installation from the old HDD.

- RHEL 9 Live USB (BIOS-enabled): You’ll need this for all disk operations and chrooting. Ensure it boots in legacy (BIOS) mode on your server.

- Identify Disk Names: Boot into the RHEL 9 Live USB and use `lsblk`, `fdisk -l`, or `parted -l` to clearly identify:

- Your old HDD (e.g., `/dev/sda`)

- Your new NVMe drive (e.g., `/dev/nvme0n1`)

- Your tiny USB drive (e.g., `/dev/sdX`, where X is a letter like b, c, d, etc.)

II. Partitioning and Data Migration to NVMe:

- Boot from the RHEL 9 Live USB (BIOS mode).

- Open a terminal.

- Partition the NVMe drive (MBR):

- Since your server is BIOS-only, stick with MBR for the NVMe drive.

- Use `fdisk` (for MBR) or `gparted` to create your partitions on the NVMe. You'll need:

- A `/boot` partition (e.g., 500 MB to 1 GB, `ext4` or `xfs`).

- A root partition `/` (e.g., `ext4` or `xfs`).

- A swap partition.

- Example using `fdisk` (replace `/dev/nvme0n1` with your NVMe device):

```bash
sudo fdisk /dev/nvme0n1
# Press 'o' for a new empty DOS partition table
# Press 'n' to create new primary partitions:
#   - First partition: for /boot (e.g., +500M, type 83 for Linux)
#   - Second partition: for swap (e.g., +2G, type 82 for Linux swap)
#   - Third partition: for / (rest of the disk, type 83 for Linux)
# Press 'a' on the /boot partition (the first one) to make it bootable (this sets the boot flag for MBR).
# Press 'w' to write changes and exit.
```

- Format the partitions:

```bash
sudo mkfs.ext4 /dev/nvme0n1p1   # For /boot (adjust partition number if different)
sudo mkfs.ext4 /dev/nvme0n1p3   # For / (adjust partition number if different)
sudo mkswap /dev/nvme0n1p2      # For swap (adjust partition number if different)
sudo swapon /dev/nvme0n1p2
```

- Clone or Rsync your RHEL 9 installation from HDD to NVMe:

- Mount the old and new partitions:

```bash
sudo mkdir -p /mnt/old_root /mnt/old_boot
sudo mount /dev/sdaX /mnt/old_root       # Replace sdaX with your old root partition
sudo mount /dev/sdaY /mnt/old_boot       # Replace sdaY with your old boot partition (if separate)
sudo mkdir -p /mnt/new_root /mnt/new_boot
sudo mount /dev/nvme0n1p3 /mnt/new_root  # Your new NVMe root partition
sudo mount /dev/nvme0n1p1 /mnt/new_boot  # Your new NVMe /boot partition
```

- Copy data using `rsync` (recommended for flexibility):

```bash
sudo rsync -avh --exclude='/dev/*' --exclude='/proc/*' --exclude='/sys/*' --exclude='/tmp/*' --exclude='/run/*' --exclude='/mnt/*' --exclude='/lost+found/*' /mnt/old_root/ /mnt/new_root/
sudo rsync -avh /mnt/old_boot/ /mnt/new_boot/   # If /boot is a separate partition
```

(If `/boot` is part of your root filesystem, you can skip the second `rsync` command, but ensure your initial `/mnt/new_root` mount includes the `/boot` directory within it.)

III. Configure RHEL 9 on NVMe (Crucial `initramfs` step):

- Chroot into the new RHEL 9 installation on NVMe:

```bash
# Ensure all necessary bind mounts are in place
for i in /sys /proc /run /dev; do sudo mount --bind "$i" "/mnt/new_root$i"; done
sudo chroot /mnt/new_root
# Inside chroot, ensure /boot is mounted if it's a separate partition
mount /dev/nvme0n1p1 /boot   # If /boot is separate on NVMe
```

- Update `/etc/fstab`:

- Inside the `chroot`, open `/etc/fstab` for editing (`nano /etc/fstab` or `vi /etc/fstab`).

- Crucially, update all UUIDs or device names to point to the new NVMe partitions.

- Get the correct UUIDs using `blkid` from the live USB.

- Example `fstab` entries (adjust UUIDs and partition numbers):

```
UUID=YOUR_NVME_ROOT_UUID  /      ext4  defaults  1 1
# The /boot line is only needed if /boot is a separate partition
UUID=YOUR_NVME_BOOT_UUID  /boot  ext4  defaults  1 2
UUID=YOUR_NVME_SWAP_UUID  swap   swap  defaults  0 0
```

- Install/Reinstall GRUB2 on the NVMe's MBR (Optional but good practice):

- This ensures the NVMe itself has a bootloader, even if the BIOS can't directly use it.

```bash
grub2-install /dev/nvme0n1               # Install GRUB to the MBR of the NVMe drive
grub2-mkconfig -o /boot/grub2/grub.cfg
```

- Update `initramfs` (ABSOLUTELY CRITICAL for NVMe drivers):

- This step ensures the `initramfs` image contains the necessary NVMe drivers so the kernel can find the root filesystem on the NVMe during early boot.

```bash
# Inside a chroot, uname -r reports the running (live USB) kernel; if that
# differs from the kernel installed on the NVMe, pass the installed version instead.
dracut -f -v --kver $(uname -r)
```

- Pay close attention to the output for any errors. This is usually the point where NVMe detection fails if drivers aren’t included.

- Exit chroot and unmount:

```bash
exit
sudo umount -R /mnt/new_root
```

IV. Prepare the Tiny USB Drive (Direct Kernel Booter):

This is the core of the solution. The USB will contain a minimal GRUB that directly loads the kernel and initramfs from the USB, then passes the NVMe root UUID to the kernel.

- Format the tiny USB drive:

- Create an MBR partition table on the USB (`sudo fdisk /dev/sdX`).

- Create a single primary partition, set it as bootable (`a` in `fdisk`), and format it (e.g., FAT32 or ext4).

```bash
sudo mkfs.fat -F 32 /dev/sdX1   # Replace /dev/sdX1 with your USB's partition
```

- Mount the tiny USB drive:

```bash
sudo mkdir -p /mnt/usb
sudo mount /dev/sdX1 /mnt/usb
```

- Copy the kernel and `initramfs` to the USB:

- You need the exact `vmlinuz` and `initramfs.img` files from your NVMe RHEL 9 installation.

- Get the kernel version: If you're still in the live USB environment, you can get the kernel version from your NVMe's `/boot` partition: `ls /mnt/new_boot/vmlinuz-*`. Let's assume the version is `5.14.0-XXX.el9.x86_64`.

- Copy these files to a dedicated directory on the USB:

```bash
sudo mkdir -p /mnt/usb/rhel9_boot
sudo cp /mnt/new_boot/vmlinuz-5.14.0-XXX.el9.x86_64 /mnt/usb/rhel9_boot/vmlinuz
sudo cp /mnt/new_boot/initramfs-5.14.0-XXX.el9.x86_64.img /mnt/usb/rhel9_boot/initramfs.img
# (Replace XXX with your actual kernel version from the NVMe)
```

- Install GRUB2 to the tiny USB (BIOS mode). On RHEL the command is `grub2-install`; other distributions call it `grub-install`:

```bash
# Replace /dev/sdX with your USB device (e.g., /dev/sdb)
sudo grub2-install --target=i386-pc --boot-directory=/mnt/usb/boot /dev/sdX
```

- Create `grub.cfg` on the tiny USB:

- Create the file `/mnt/usb/boot/grub2/grub.cfg` (the directory is `boot/grub` with `grub-install` on other distributions).

- Add the following content. This is the critical configuration for direct kernel boot:

```
set default="0"
set timeout=5

menuentry "Boot RHEL 9 from NVMe" {
    # Load kernel and initramfs directly from the USB drive
    linux /rhel9_boot/vmlinuz root=UUID=YOUR_NVME_ROOT_UUID ro rhgb quiet
    initrd /rhel9_boot/initramfs.img
}
```

- `YOUR_NVME_ROOT_UUID`: This is the most crucial part. Get the exact UUID of your NVMe root partition (e.g., `/dev/nvme0n1p3` if that's your root) by running `sudo blkid /dev/nvme0n1p3` from the live USB. Replace this placeholder with the actual UUID.

- `ro rhgb quiet`: Standard kernel parameters. You can add `rd.driver.blacklist=nouveau` if you have NVIDIA graphics and encounter issues.

- `linux /rhel9_boot/vmlinuz`: Tells GRUB to load the kernel from the `/rhel9_boot` directory on the USB.

- `initrd /rhel9_boot/initramfs.img`: Tells GRUB to load the initramfs from the same directory on the USB.

- Unmount the tiny USB:

```bash
sudo umount /mnt/usb
```

V. BIOS Configuration and First Boot:

- Connect the tiny USB drive and the NVMe drive to the server.

- Enter your server’s BIOS settings.

- Set the boot order: Prioritize the tiny USB drive as the very first boot device.

- Ensure Legacy Boot / CSM is enabled: This should be the default or only option on an old BIOS server.

- Save changes and exit BIOS.

VI. Final Steps and Troubleshooting:

- Reboot the server.

- It should boot from the tiny USB drive.

- The GRUB menu from the USB should appear.

- Select “Boot RHEL 9 from NVMe”.

- GRUB on the USB will then load the kernel and initramfs (both from the USB).

- The kernel, now running with its NVMe drivers (from the `initramfs`), will use the `root=UUID` parameter to mount the NVMe root filesystem and continue the boot process.

- Troubleshooting:

- “No bootable device found” (BIOS error): The BIOS isn’t recognizing the USB as bootable.

- Re-check the USB creation steps (`grub2-install --target=i386-pc`).

- Ensure the USB has an MBR partition table and its first partition is marked bootable.

- Try a different USB port or even a different USB stick.

- GRUB prompt on USB, but it doesn’t load the kernel:

- Double-check the `grub.cfg` on the USB for typos in the `linux` and `initrd` lines (e.g., `/rhel9_boot/vmlinuz` and `/rhel9_boot/initramfs.img`).

- Verify that the `vmlinuz` and `initramfs.img` files are actually present in `/mnt/usb/rhel9_boot` on the USB stick.

- Kernel Panic immediately after “Loading initial ramdisk…” or similar:

- This is the most common failure point. It means the `initramfs` loaded from the USB is missing critical NVMe drivers.

- Solution: Boot back into the RHEL 9 Live USB, chroot back into the NVMe RHEL installation, and rerun `dracut -f -v --kver $(uname -r)` (inside a chroot, `uname -r` shows the live system's kernel, so give the installed version explicitly if they differ). This is vital to ensure all necessary NVMe modules are included in the `initramfs`. After regenerating, re-copy the new `vmlinuz` and `initramfs.img` files to your tiny USB drive.

- Boot hangs or drops to emergency shell after kernel loads:

- `root=UUID` is incorrect: This is another very common issue. Double-check the UUID of your NVMe root partition using `sudo blkid /dev/nvme0n1p3` (or whatever your root partition is) and ensure it's exact in your `grub.cfg` on the USB.

- `/etc/fstab` on NVMe is incorrect: If the kernel finds the root but then `systemd` fails to mount other partitions, check your `/etc/fstab` on the NVMe for correct UUIDs/device names for all partitions (including swap).

This direct kernel loading method is much more reliable for systems with limited BIOS NVMe support, as it leverages Linux's capabilities from the earliest possible moment.
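Both of the last failure modes come down to copying UUIDs correctly. The sketch below prints the three `fstab` lines of the example layout above from UUIDs read with `blkid -s UUID -o value`. The function name `nvme_fstab`, the ext4 file systems and the root, `/boot` and swap layout are assumptions taken from Gemini's example, not from the actual server:

```bash
# Print /etc/fstab lines for the example NVMe layout (root, /boot, swap).
# Arguments are the three UUIDs, e.g. from "blkid -s UUID -o value /dev/nvme0n1p3".
nvme_fstab() {
    root_uuid=$1 boot_uuid=$2 swap_uuid=$3
    if [ -z "$root_uuid" ] || [ -z "$boot_uuid" ] || [ -z "$swap_uuid" ]; then
        echo "usage: nvme_fstab ROOT_UUID BOOT_UUID SWAP_UUID" >&2
        return 2
    fi
    printf 'UUID=%s /     ext4 defaults 1 1\n' "$root_uuid"
    printf 'UUID=%s /boot ext4 defaults 1 2\n' "$boot_uuid"
    printf 'UUID=%s swap  swap defaults 0 0\n' "$swap_uuid"
}
```

For example, `nvme_fstab "$(blkid -s UUID -o value /dev/nvme0n1p3)" "$(blkid -s UUID -o value /dev/nvme0n1p1)" "$(blkid -s UUID -o value /dev/nvme0n1p2)"` prints lines that can be compared with the NVMe's `/etc/fstab` before the first reboot.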

- The first photo is an overview of the HP ProLiant DL380p G8.

- Next is the USB stick.

- The NVMe disk on the secondary riser.

- I also tried booting from a USB 3.x stick mounted on an adapter, shown in the last picture. It was more than 10 times as fast, but didn't boot.

- A “new” 5 TB HDD. Order date March 20th, price EUR 138.98 incl. VAT and shipping.

So here is the grub.cfg file that made it possible to boot from the NVMe SSD:

```
set default="0"
set timeout=5

menuentry "Boot RHEL 9.6 from NVMe" {
    # Load kernel and initramfs directly from the USB drive
    linux /vmlinuz-5.14.0-570.17.1.el9_6.x86_64 root=UUID=145504ab-78ac-4ca8-8fe4-88050b7c69fd ro rhgb quiet selinux=0
    initrd /initramfs-5.14.0-570.17.1.el9_6.x86_64.img
}
```

It doesn't integrate with the RHEL 9 upgrade system at all, but how often do you need to upgrade the kernel on a server, or even reboot it? If you don't do hardware upgrades, the answer might be no more than once every few years, or even never: you just retire the server.
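Because the USB stick is outside the RHEL update machinery, a kernel update means copying the new files and editing this file by hand. Here is a minimal sketch of a helper for that. It assumes the versioned kernel and initramfs files sit in the root of the USB partition, as above. `latest_kernel_version` and `usb_grub_cfg` are hypothetical names, and `sort -V` assumes GNU coreutils:

```bash
# Print the newest kernel version found as vmlinuz-<version> in a boot
# directory (default /boot), skipping the rescue kernel.
latest_kernel_version() {
    ls "${1:-/boot}" | sed -n 's/^vmlinuz-//p' | grep -v rescue | sort -V | tail -n 1
}

# Print a minimal grub.cfg that loads the given kernel and initramfs from the
# root of the USB partition and mounts the root filesystem by UUID.
# The kernel options are copied from the working grub.cfg above.
usb_grub_cfg() {
    kver=$1 root_uuid=$2
    cat <<EOF
set default="0"
set timeout=5

menuentry "Boot RHEL 9 from NVMe ($kver)" {
    linux /vmlinuz-$kver root=UUID=$root_uuid ro rhgb quiet selinux=0
    initrd /initramfs-$kver.img
}
EOF
}
```

Assuming `/dev/nvme0n1p2` is the root partition, as in the sync script, `usb_grub_cfg "$(latest_kernel_version /boot)" "$(blkid -s UUID -o value /dev/nvme0n1p2)"` prints a configuration of the same shape. Redirect it into the grub.cfg on the mounted USB stick after copying the matching vmlinuz and initramfs files there.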

I will have to reboot anyway, because after growing a RAID 5 array, or a logical drive as Linux sees it, there is no way to tell Linux to re-read its size; you have to reboot. But my RHEL 9 is already up to date, so there is no need for a kernel update.

How much did Google Gemini help?

It is hard to tell, but I can pinpoint the two issues where it helped the most.

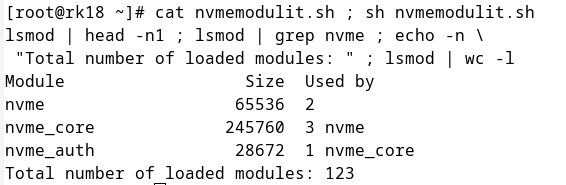

- Generating an initramfs with the appropriate modules in it. The Linux kernel loads drivers as modules when needed, and there are lots of them. In the first stages of booting, the kernel gets them from the initramfs, which should be kept as small as possible. For NVMe support it needs three modules.

- A working grub.cfg file. I tried editing a copy of the very complicated RHEL-generated one, when a minimalistic one would have been better.
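Whether an initramfs really contains the NVMe drivers can be checked before rebooting with `lsinitrd`, which comes with dracut. The three modules aren't named here, so the sketch below only looks for `nvme` and `nvme-core`, the NVMe host driver and its core module. Treat that list as an assumption for your kernel. `check_initramfs_nvme` is a hypothetical name:

```bash
# Read an initramfs file listing (e.g. the output of lsinitrd) on stdin and
# report which of the assumed NVMe modules are missing from it.
check_initramfs_nvme() {
    listing=$(cat)
    missing=""
    for mod in nvme nvme-core; do
        # Match ".../nvme.ko" or ".../nvme.ko.xz", but not nvme-core.ko for "nvme"
        if ! printf '%s\n' "$listing" | grep -Eq "/$mod\.ko(\.[a-z]+)?\$"; then
            missing="$missing $mod"
        fi
    done
    if [ -n "$missing" ]; then
        echo "missing:$missing"
        return 1
    fi
    echo "ok"
}
```

Typical use is `lsinitrd /boot/initramfs-$(uname -r).img | check_initramfs_nvme`. If something is reported missing, `dracut -f --add-drivers "nvme nvme_core" --kver <version>` is one way to force the modules in.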

It was also good to have Gemini's first approach proven wrong, although it would have been better if it had worked. And then there is this write-up: most of the dull instruction writing was done by Gemini.

Erasing a RAID array and growing another on an HP ProLiant DL380p G8 server

The HP ProLiant DL380p G8 has an embedded Smart Array P420i controller. While you can change its configuration and drives through the iLO remote console, you can also use the ssacli command over an SSH connection, and that way you don't have to reboot the server. You do have to physically remove the drives to free the bays and insert new ones, but the bays are clearly numbered and unassigned drives have their tray LEDs unlit, so it is quite easy.

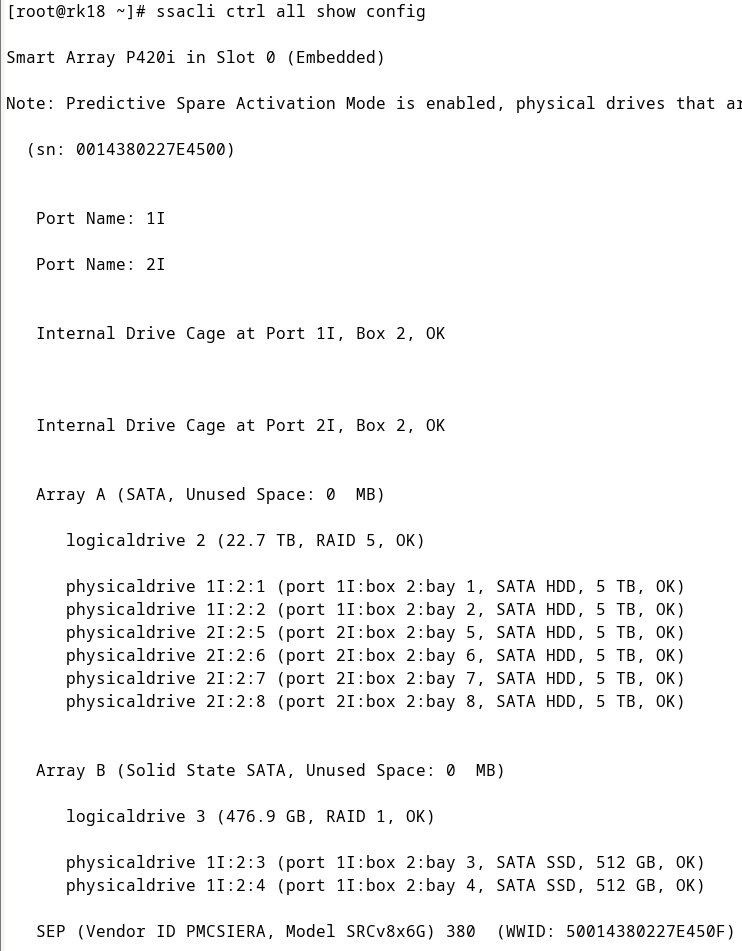

First let’s look at what we have:

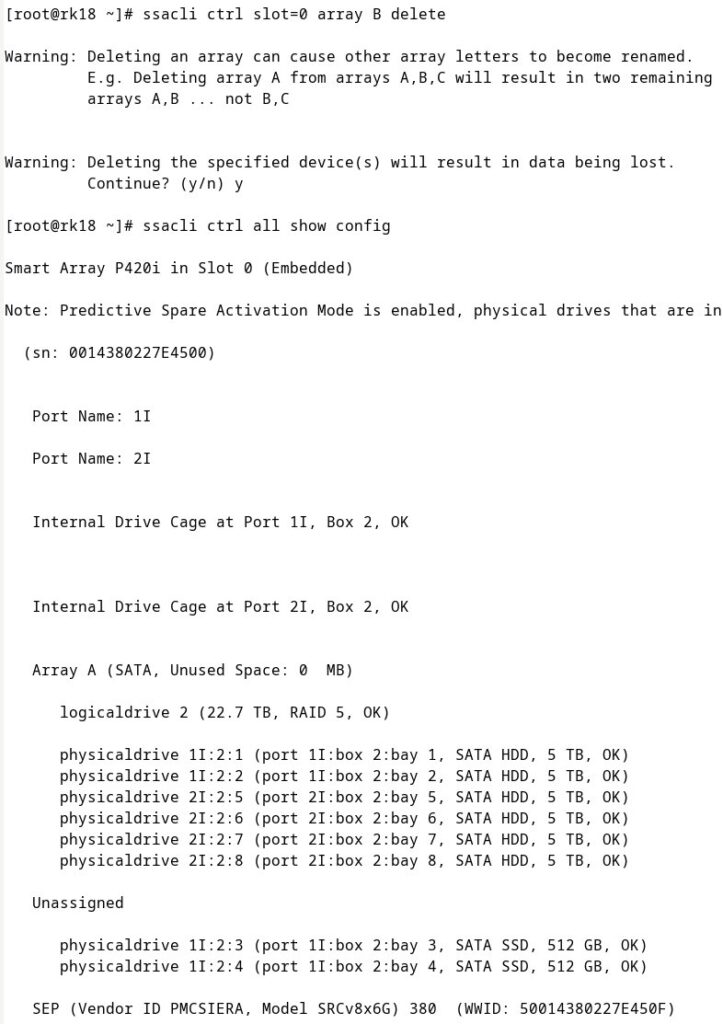

Then delete array B and show config again:

Now the trays on bays 3 and 4 have unlit LEDs and can be replaced.

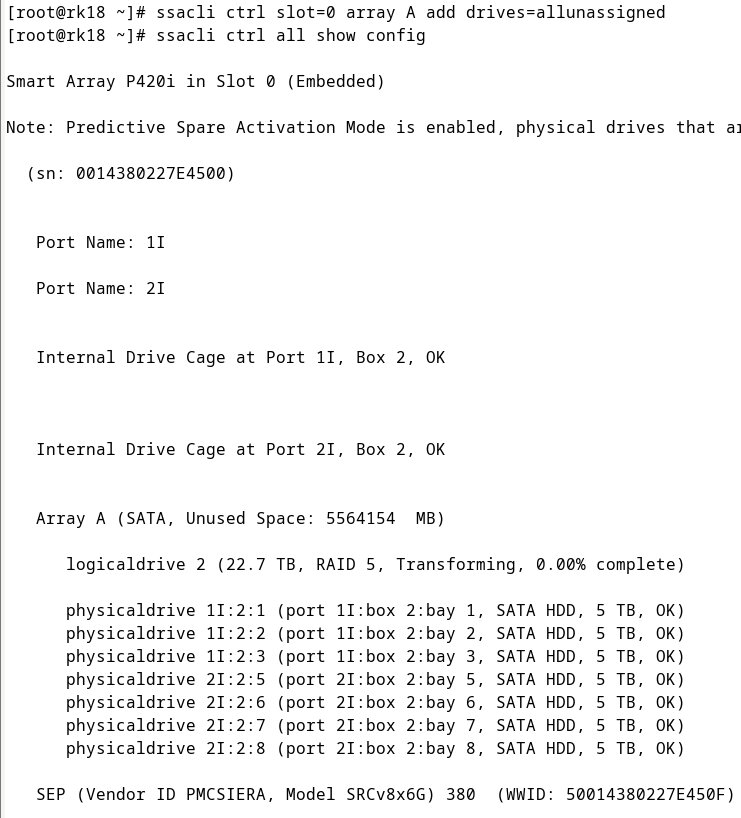

Back from the server room at your desk:

The transformation will take a couple of days, but you can append “; date” to the “show config” command, repeat it after an hour and calculate how long the whole operation will take.
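Here is a minimal sketch of that calculation. It assumes you read the completion percentage off two `ssacli ctrl slot=0 show config; date` runs by hand, and that the progress rate stays constant, which in practice depends on how busy the array is. `transform_eta_hours` is a made-up name:

```bash
# Estimate the remaining hours of a RAID transformation from two readings of
# the percent-complete figure taken a known number of minutes apart.
# Usage: transform_eta_hours FIRST_PERCENT SECOND_PERCENT MINUTES_BETWEEN
transform_eta_hours() {
    awk -v p1="$1" -v p2="$2" -v mins="$3" 'BEGIN {
        if (mins <= 0 || p2 <= p1) { print "no progress"; exit 1 }
        rate = (p2 - p1) / mins                  # percent per minute
        printf "%.1f\n", (100 - p2) / rate / 60  # remaining minutes -> hours
    }'
}
```

For example, going from 10% to 11% in 60 minutes leaves 89% at 1% per hour, so `transform_eta_hours 10 11 60` prints 89.0.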

My early estimate is a little over six days, but it is based on only a 20-minute wait, so the real time could be quite different. I think it took four to five days when I added the sixth drive over a year ago.

The next steps are a reboot after the transformation has finished, then parted with its resizepart command, then xfs_growfs, and it's done. Those last two operations will be fast.
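Before running parted, it helps to confirm that the kernel really sees the enlarged logical drive. Below is a minimal sketch that reads the size from sysfs, where `/sys/class/block/<name>/size` is always counted in 512-byte sectors. The function name is hypothetical, and the optional second argument exists only so it can be tried against a fake directory:

```bash
# Print the size of a block device in TiB as the running kernel sees it.
# Usage: kernel_size_tib NAME [SYSFS_BLOCK_DIR], e.g. kernel_size_tib sdb
kernel_size_tib() {
    sizefile=${2:-/sys/class/block}/$1/size
    if [ ! -r "$sizefile" ]; then
        echo "cannot read $sizefile" >&2
        return 2
    fi
    # The size file holds a count of 512-byte sectors
    awk '{ printf "%.2f\n", $1 * 512 / (1024 ^ 4) }' "$sizefile"
}
```

After the reboot, `kernel_size_tib sdb` should roughly match the logical drive size that ssacli reports. If it still prints the old size, parted has nothing to grow into.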

Update 27th May

It looks like the transformation will take about 10 days, but that depends a lot on how much the drive is used. I will post another update later about how long it took and about growing the partition and filesystem.

Update 7th June

It looks like the transformation will be finished around midnight, after 12 days and 15 hours. Tomorrow I will reboot the server and grow the partition and filesystem.

Update 8th June

I forgot one thing before the reboot: telling the controller to expand the logical drive with the command:

```
ssacli ctrl slot=0 ld 2 modify size=max
```

After that, “show config” said “Array A (SATA, Unused Space: 0 MB)” and “logicaldrive 2 (27.3 TB, RAID 5, OK)”, and it was time for another reboot, this time for a reason.
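The 27.3 TB figure is easy to sanity-check. RAID 5 gives up one disk's capacity to parity, so seven 5 TB disks hold (7 − 1) × 5 × 10^12 bytes, which is about 27.3 TiB. The controller's “TB” therefore appears to be binary units. Below is a minimal sketch of that arithmetic; `raid5_usable_tib` is a made-up name, and disk sizes are taken as the vendor's decimal terabytes:

```bash
# Usable RAID 5 capacity in TiB: (disks - 1) * disk size, parity costs one disk.
# Usage: raid5_usable_tib NUMBER_OF_DISKS DISK_SIZE_IN_DECIMAL_TB
raid5_usable_tib() {
    awk -v n="$1" -v tb="$2" 'BEGIN {
        if (n < 3) { print "RAID 5 needs at least 3 disks" > "/dev/stderr"; exit 2 }
        printf "%.1f\n", (n - 1) * tb * 1e12 / (1024 ^ 4)
    }'
}
```

`raid5_usable_tib 7 5` prints 27.3, and `raid5_usable_tib 6 5` prints 22.7, the size before the seventh disk was added.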

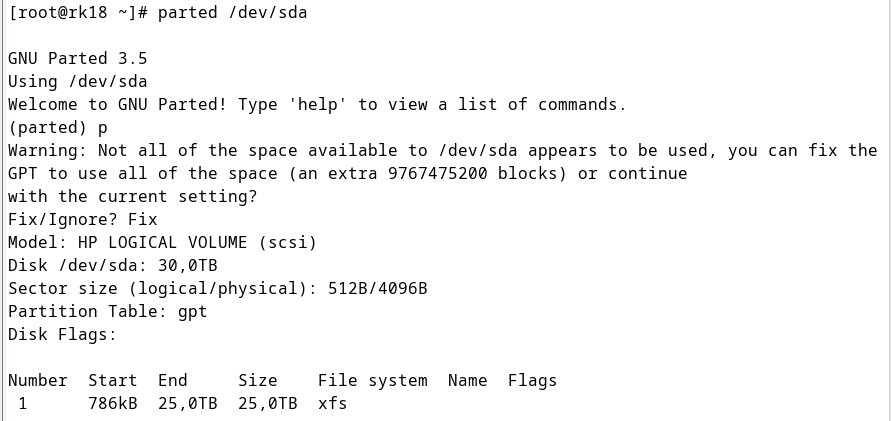

After reboot I checked the disk sizes (abbreviated):

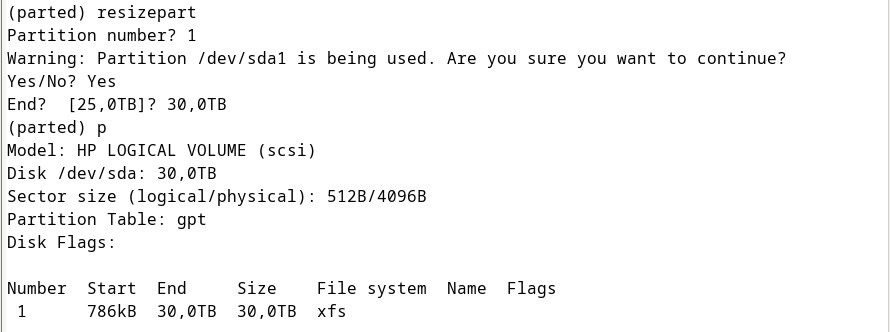

And run parted which at first it offered to fix the GPT and then I was able to tell it to resize the partition:

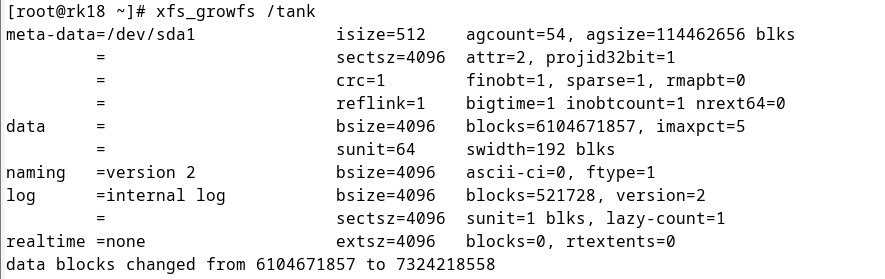

Then I resized the filesystem which also finished instantly:

Disk usage went down from 77% to 64% and free space up from 5.5T to 10T.

I thought that was it, and at first hdparm -Tt reported over 600 MB/s, but soon the figure dropped to around 130 MB/s, the disks began to heat up, and power usage was 10 to 20 watts higher than expected.

- Temperatures of the DL380 on 7th June. Note the 12-HD Max and 25-HD Controller temperatures.

- Fan speeds.

- Power usage.

- Temperatures of the DL380 on 9th June.

- Fan speeds.

- Power usage.

Power usage was around 120 watts before I added the seventh 5 TB disk, and each disk uses only about one watt when idle and around two watts when working hard.